Layer multiple overlapping models together to understand the world

By Duncan Anderson.

Summary: the world is too big and too complicated to understand everything at full resolution. Use multiple overlapping mental models to give yourself a quality understanding!

Almost every idea can be represented as a 3D Model with Pieces and an Instruction Set (see blog).

Almost every idea can be misrepresented as a 3D Model with Pieces and an Instruction Set.

“The best lies are half truths.”

One model can make you feel you understand the problem space better than you would with ‘no mental model’, but that feeling can be false, and relying on one mental model can be really dangerous.

False confidence can get you into much more trouble than ‘I have no f!@#ing idea what is going on here’.

If you are only using one model, you are by definition an ‘ideologue’.

We all have blind spots and ego distortions.

A single model should illuminate some blind spots and ego distortions… but also likely create new blind spots and ego distortions.

Strict adherence to one model / ideology is typically dangerous. Ideologue = someone with blind spots and ego distortions because they have only one way of looking at a problem space.

So… use multiple complementary overlapping models (2-5x) to explain a given problem space.

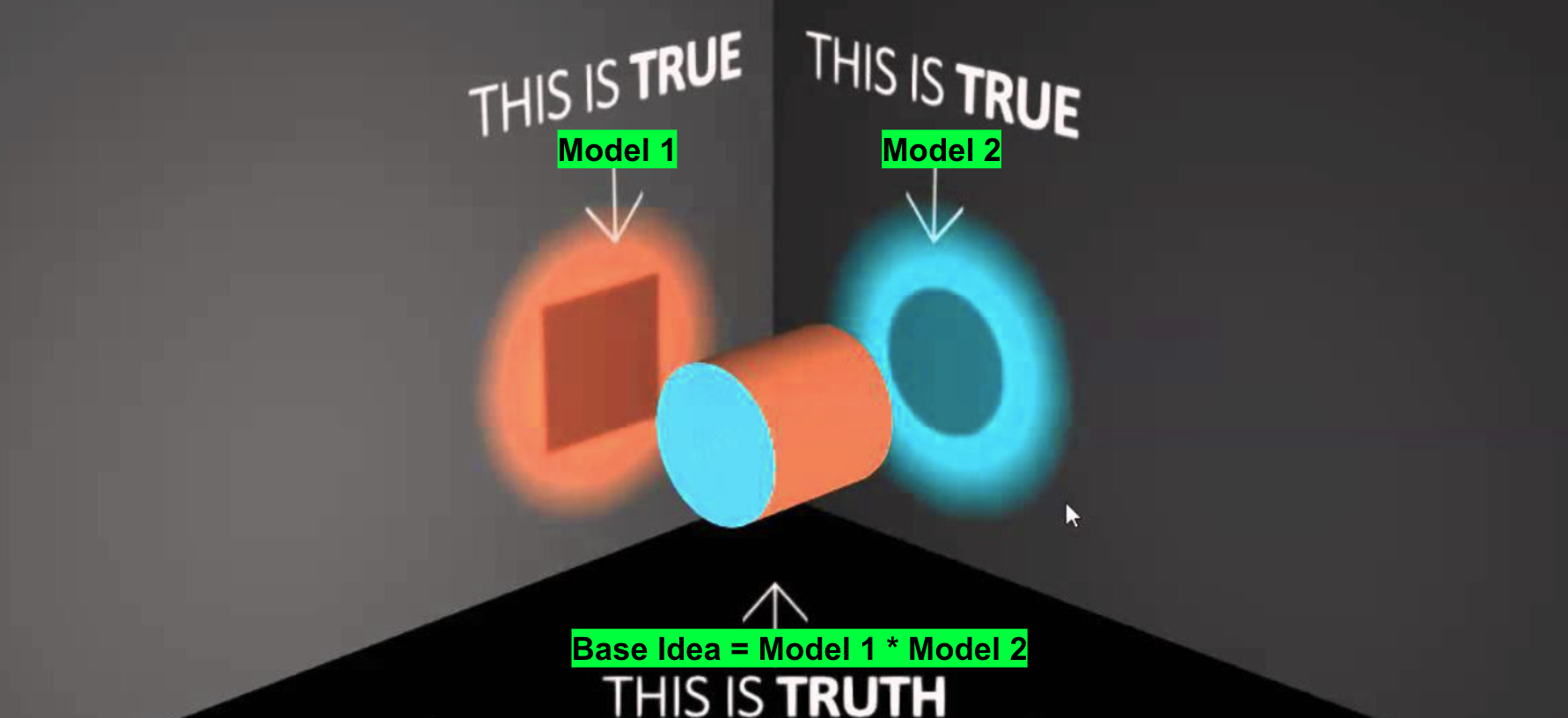

Aside: I love the above square / circle / cylinder model as an example of what happens in political messaging.

The left say that ‘it’s a square’. And in isolation when you listen to them it makes sense.

Then you hear someone from the right say ‘it’s a circle’. And in isolation when you listen to them it makes sense.

How can both the left and right make sense on the same topic but often say totally different things? The best lies are half truths. Often it’s not a square, it’s not a circle, it’s a cylinder :)

IMO wanton misrepresentation is NOT OK!

“Please take your circle and take your square and stick them in your cylinder ;P”

++++++++++++++++++++++++

Details

The world is complex; you cannot understand everything at full resolution.

You need to make approximations (mental models) that improve your ability to make sense of the world more than they hinder.

“All models are wrong, some are useful.”

Newtonian physics is an approximation (eg Momentum = Mass * Velocity). It is a model for the world that is not perfect but in many places it is very useful.

Chemistry is an approximation (eg CH4 + 2O2 => 2H2O + CO2). People don’t know if this is true, but it can be a very useful way to understand the world.

“Everything works somewhere, nothing works everywhere.”

Make sure your model (map) fits the terrain. Don’t bluntly apply a model you have everywhere.

“If all you have is a hammer, everything looks like a nail.”

If all you have is a hammer, it’s a recipe for getting sore fingers from using the hammer too much and incorrectly!

Person 1: “Hey I think we need to microwave this…”

Person 2: “No no, I’ve got my hammer, let’s hammer the sh1t out of it. It’s hammer time!”

“The closest possible understanding of reality (ie a true reflection of reality) is the core foundation needed to build a good life.”

If you have a poor understanding of reality, then anything you build on top of this ‘foundation’ is ‘shaky’.

“Do not prescribe before you diagnose.”

If ‘all models are wrong, but some are useful’, how do we try to get the best understanding of the world we can? A model of models… marvelous :)

L0: not using mental models, just pure intuition.

Jingle: ‘our strategy for doing well is to be lucky’

-L1: using one mental model that doesn’t fit the problem space

Description: the model is more of a hindrance than a help.


Jingle: ‘It’s hammer time b1tches! Definitely the best way to make a china vase is with this hammer I have!’

L1: using one mental model that fits the problem space.

Description: you get a false sense of confidence, because moving from ‘L0: no model’ to ‘L1: 1x useful model’ normally means you uncover a bunch of blind spots and ego distortions, and it is easy to assume you have found them all.


Jingle: ‘look at me, I figured some sh1t out… but pride cometh before a fall!’

L2: 2x models that fit the problem space but don’t overlap

Description: at times I find that having 2x non-overlapping models for a problem space actually confuses me more than having just one, as I can’t get a grasp on the overall size of the problem space or the places where an individual model stops working.


Jingle: I have brought a knife and a gun to this fight. Both are useful… but who am I fighting and where are they?

L3: 2x models that fit the problem space AND overlap (aka complement each other)

Description: you can start to see a cylinder rather than just a square.


Jingle: “I have brought a hammer and a screwdriver, I think we should be able to get this job done.”

L4: 2-5x complementary models that fit the problem space and provide you with a high quality understanding

Description: I understand where the problem space starts and ends, I know where my models start and end (ie their strengths and weaknesses AKA blind spots and ego distortions), and I can layer them together to get a quality 3D understanding of the problem space.


Jingle: I have a toolbox full of 50x tools, and I’ve picked the right tools for the idea. This means I’m not a tool, but a box full of ways to help your idea!

L5: too many models, so you can’t work out how to layer them together.

Description: you want the simplest way to understand a problem space. The more moving parts (pieces) the harder it is to join them together into a quality solution.


Jingle: what the smart person does in the beginning, the stupid person does in the end.

Overall Jingle: model your understanding… so you can understand your models

Yes I’m proud of this!

“You don’t know what you don’t know.”

IMO it’s best to assume you are wrong until proven right.

IMO the best chance of having a ‘high quality understanding’ of the problem space is through 2-5x quality overlapping mental models.

IMO it’s best to have ‘external regulators’, ie people who help you know when your head is in your cylinder. Constantly cultivate external regulators.

IMO it’s best to have external quantitative metrics that will confirm whether your understanding is correct (eg if Edrolo expects to get majority market share in textbooks and we don’t, then our understanding is wrong). IMO don’t worry about being wrong; worry about not finding out that you are wrong.

“It ain't what you don't know that gets you into trouble; it's what you know for sure that just ain't so.” Mark Twain.

… wait for it, an overlapping model to explain explaining with models. OMG so meta :)

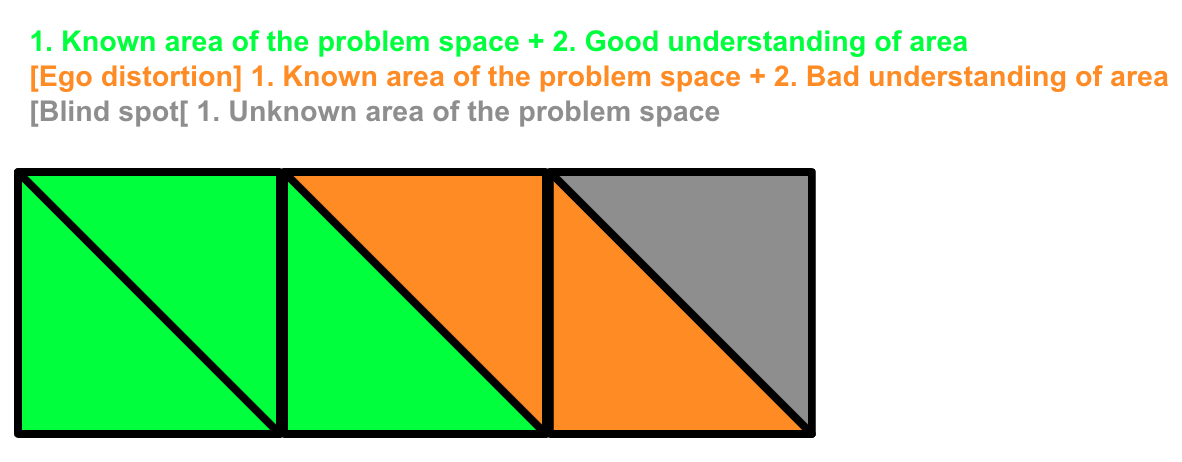

L0: no model, so you don’t have an understanding of the problem space.

L1: 1x model, but you have non-trivial blind spots (ie an incomplete understanding of the problem space: you are unaware of ⅓ of it, so ⅓ is a blind spot) and non-trivial ego distortion (ie ⅓ that you think you understand properly but are incorrect about).

For the ⅓ with an ego distortion you see a ‘circle’ but it’s actually a ‘cylinder’.

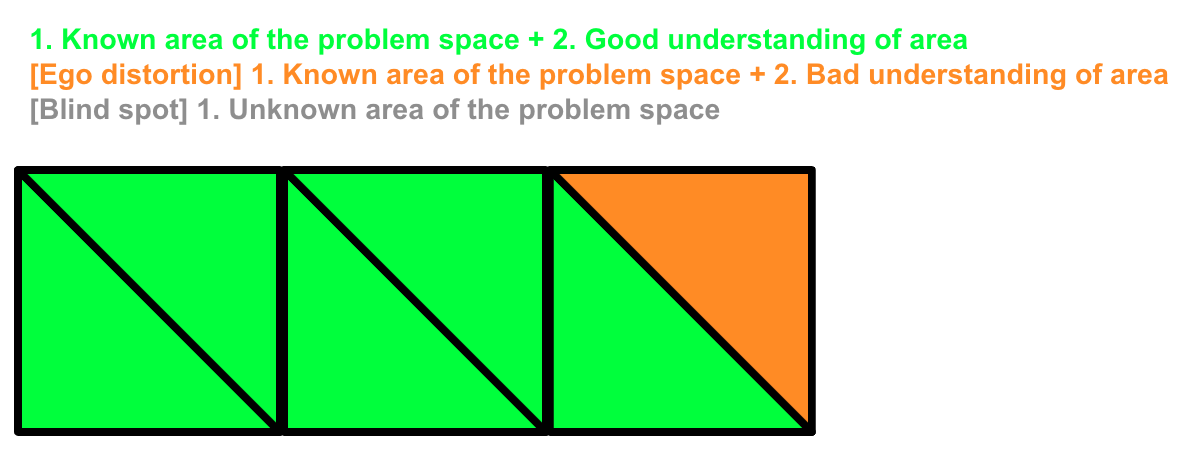

L2: 2x overlapping models.

You decrease blind spots and ego distortions.

L3: 2-5x quality overlapping models.

I think that it’s effectively impossible to have 100% understanding of the problem space with zero blind spots and ego distortions, ie a perfect reflection of reality… so I aim for 90%+ problem space awareness with a 90%+ true reflection of it.

Rule 1: don’t fool yourself. Rule 2: you are the easiest person to fool!

Jingle: don’t be a model fool, use models fool!

Yeah I’m also happy with this one!

In search of the next top imperfect model

Sometimes models that help you explain your problem don’t come from your direct problem space

To learn how to teach better, don’t look at the best teachers, look at human behaviour

To learn how to make more money, don’t look at making more of what you’ve got, look at how to problem solve better

I think a good way to find potential models for your ‘runway’ is to find your doppelgangers in a different area of the world and give it a twirl

If you only take one thing away:

If you can’t model your mind you’ll end up in a mental muddle!

One model will likely misguide you into believing you have meaning (solid understanding).

Multiple (2-5) models layered together will mean your generated meaning is magnificent!